Experimental Design: Types, Examples & Methods

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how they will allocate their sample to the different experimental groups. For example, if there are 10 participants, will all 10 participants take part in both groups (e.g., repeated measures), or will the participants be split in half and take part in only one group each?

Three types of experimental designs are commonly used:

1. Independent Measures

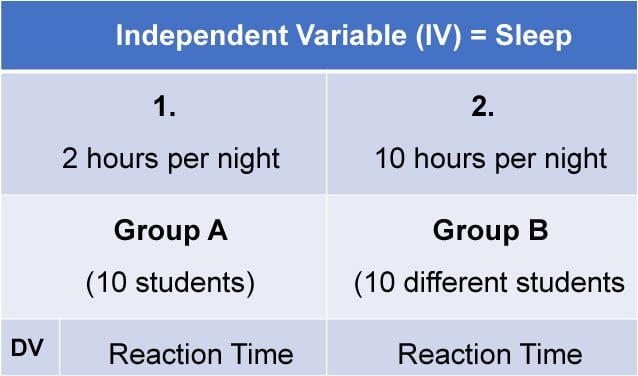

Independent measures design, also known as between-groups design, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

This should be done by random allocation, ensuring that each participant has an equal chance of being assigned to each group.

Independent measures involve using two separate groups of participants, one in each condition.

- Con: More people are needed than with the repeated measures design (i.e., more time-consuming).

- Pro: Avoids order effects (such as practice or fatigue) as people participate in one condition only. If a person is involved in several conditions, they may become bored, tired, and fed up by the time they come to the second condition or become wise to the requirements of the experiment!

- Con: Differences between participants in the groups may affect results, for example, variations in age, gender, or social background. These differences are known as participant variables (i.e., a type of extraneous variable).

- Control: After the participants have been recruited, they should be randomly assigned to their groups. This should ensure the groups are similar, on average (reducing participant variables).
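As a rough illustration of random allocation, the sketch below shuffles a participant list and deals it into two conditions. The participant IDs, the function name `randomly_allocate`, and the fixed seed are assumptions made for the example, not part of any standard procedure.

```python
import random


def randomly_allocate(participants, conditions=("experimental", "control"), seed=None):
    """Shuffle the participants, then deal them into the conditions in turn."""
    rng = random.Random(seed)  # a seed is used here only to make the example repeatable
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, participant in enumerate(shuffled):
        # Dealing in turn keeps the group sizes as equal as possible.
        groups[conditions[index % len(conditions)]].append(participant)
    return groups


# Hypothetical sample of 10 participants, P1 to P10.
participants = [f"P{n}" for n in range(1, 11)]
groups = randomly_allocate(participants, seed=42)
print(groups)
```

Because the list is shuffled before it is dealt out, every participant has the same chance of ending up in either condition, and each participant appears in exactly one group.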

2. Repeated Measures Design

Repeated Measures design is an experimental design where the same participants participate in each independent variable condition. This means that each experiment condition includes the same group of participants.

Repeated Measures design is also known as within-groups or within-subjects design.

- Pro: As the same participants are used in each condition, participant variables (i.e., individual differences) are reduced.

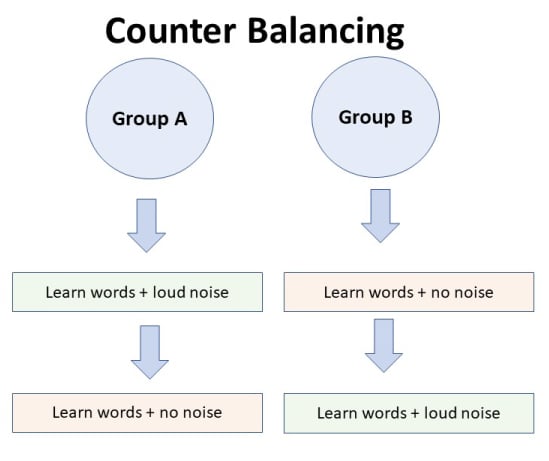

- Con: There may be order effects. Order effects refer to the order of the conditions affecting the participants’ behavior. Performance in the second condition may be better because the participants know what to do (i.e., practice effect). Or their performance might be worse in the second condition because they are tired (i.e., fatigue effect). This limitation can be controlled using counterbalancing.

- Pro: Fewer people are needed as they participate in all conditions (i.e., saves time).

- Control: To combat order effects, the researcher counterbalances the order of the conditions for the participants, alternating the order in which participants perform the different conditions of an experiment.

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

We would expect the participants to learn better in “no noise,” but part of that improvement could be due to order effects, such as practice, rather than to the noise level itself. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, with the two conditions labeled A (“loud noise”) and B (“no noise”). For example, group 1 does ‘A’ then ‘B,’ and group 2 does ‘B’ then ‘A.’ This is to balance order effects across the two conditions.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
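The two-group scheme described above can be sketched in a few lines. Here A stands for “loud noise” and B for “no noise”; the participant IDs, the function name `counterbalance`, and the seed are illustrative assumptions.

```python
import random


def counterbalance(participants, orders=(("A", "B"), ("B", "A")), seed=None):
    """Give each participant one of the condition orders, alternating after a shuffle."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Alternating through the orders means each order is used equally often
    # (or as close to equally as the sample size allows).
    return {p: orders[i % len(orders)] for i, p in enumerate(shuffled)}


# Hypothetical sample of 10 participants; A = "loud noise", B = "no noise".
schedule = counterbalance([f"P{n}" for n in range(1, 11)], seed=1)
for participant, order in sorted(schedule.items()):
    print(participant, "->", " then ".join(order))
```

With 10 participants, five complete A then B and five complete B then A, so any practice or fatigue effect falls on each condition equally often.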

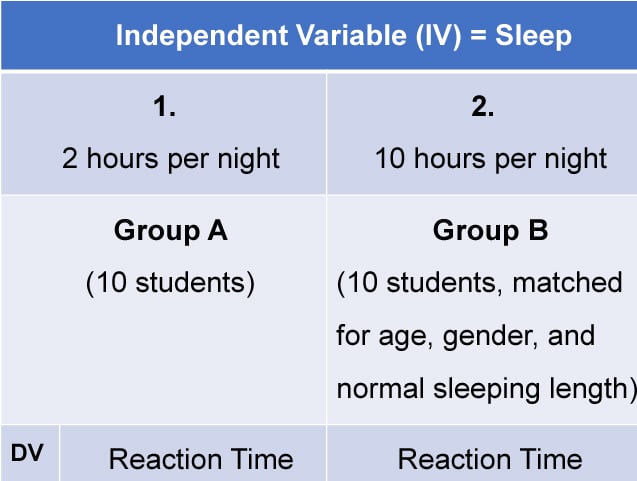

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status. One member of each pair is then placed into the experimental group and the other member into the control group.

One member of each matched pair must be randomly assigned to the experimental group and the other to the control group.

- Con: If one participant drops out, the data from both members of that pair are lost.

- Pro: Reduces participant variables because the researcher has tried to pair up the participants so that each condition has people with similar abilities and characteristics.

- Con: Very time-consuming trying to find closely matched pairs.

- Pro: It avoids order effects, so counterbalancing is not necessary.

- Con: Impossible to match people exactly unless they are identical twins!

- Control: Members of each pair should be randomly assigned to conditions. However, this does not solve all these problems.
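One simple way to form matched pairs is to rank participants on the matching variable, pair neighbors in that ranking, and then randomly assign the members of each pair to conditions. The sketch below assumes a single numeric matching score per participant; the scores, IDs, and the function name `matched_pairs` are hypothetical.

```python
import random


def matched_pairs(scores, seed=None):
    """Pair participants with adjacent scores, then randomize within each pair.

    scores maps participant ID -> score on the matching variable.
    With an odd number of participants, the highest-ranked one is left unpaired.
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)  # participant IDs, lowest score first
    pairs = []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random assignment within the pair
        pairs.append({"experimental": pair[0], "control": pair[1]})
    return pairs


# Hypothetical scores on the matching variable (e.g., a pretest).
scores = {"P1": 12, "P2": 30, "P3": 14, "P4": 29, "P5": 21, "P6": 22}
pairs = matched_pairs(scores, seed=7)
print(pairs)
```

Pairing adjacent ranks is only one heuristic; matching on several variables at once would need a different approach.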

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups: Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups: The same participants take part in each condition of the independent variable.

3. Matched pairs: Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

1. To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

The researchers attempted to ensure that the patients in the two groups had similar severity of depressed symptoms by administering a standardized test of depression to each participant, then pairing them according to the severity of their symptoms.

2. To assess the difference in reading comprehension between 7 and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

3. To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

4. To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Condition one attempted to recall a list of words that were organized into meaningful categories; condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

The clues in an experiment that lead the participants to think they know what the researcher is looking for (e.g., the experimenter’s body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

The variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.

The 3 Types Of Experimental Design

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.


Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

Experimental design refers to a research methodology that allows researchers to test a hypothesis regarding the effects of an independent variable on a dependent variable.

There are three types of experimental design: pre-experimental design, quasi-experimental design, and true experimental design.

Experimental Design in a Nutshell

A typical and simple experiment will look like the following:

- The experiment consists of two groups: treatment and control.

- Participants are randomly assigned to be in one of the groups (‘conditions’).

- The treatment group participants are administered the independent variable (e.g., given a medication).

- The control group is not given the treatment.

- The researchers then measure a dependent variable (e.g., improvement in health between the groups).

If the independent variable affects the dependent variable, then there should be noticeable differences on the dependent variable between the treatment and control conditions.

The experiment is a type of research methodology that involves the manipulation of at least one independent variable and the measurement of at least one dependent variable.

If the independent variable affects the dependent variable, then the researchers can use the term “causality.”

Types of Experimental Design

1. Pre-Experimental Design

A researcher may use pre-experimental design if they want to test the effects of the independent variable on a single participant or a small group of participants.

The purpose is exploratory in nature, to see if the independent variable has any effect at all.

The pre-experiment is the simplest form of experiment and does not contain a control condition.

However, because there is no control condition for comparison, the researcher cannot conclude that the independent variable causes change in the dependent variable.

Examples include:

- Action Research in the Classroom: Action research in education involves a teacher conducting small-scale research in their classroom designed to address problems they and their students currently face.

- Case Study Research: Case studies are small-scale, often in-depth, studies that are not usually generalizable.

- A Pilot Study: Pilot studies are small-scale studies that take place before the main experiment to test the feasibility of the project.

- Ethnography: An ethnographic research study will involve thick research of a small cohort to generate descriptive rather than predictive results.

2. Quasi-Experimental Design

The quasi-experiment is a methodology to test the effects of an independent variable on a dependent variable. However, the participants are not randomly assigned to treatment or control conditions. Instead, the participants already exist in representative sample groups or categories, such as male/female or high/low SES class.

Because the participants cannot be randomly assigned to male/female or high/low SES, there are limitations on the use of the term “causality.”

Researchers must refrain from inferring that the independent variable caused changes in the dependent variable because the participants existed in already formed categories before the study began.

- Homogeneous Representative Sampling: When the research participant group is homogeneous (i.e., not diverse), the generalizability of the study is diminished.

- Non-Probability Sampling: When researchers select participants through subjective means such as non-probability sampling, they are engaging in quasi-experimental design and cannot assign causality.


3. True Experimental Design

A true experiment involves a design in which participants are randomly assigned to conditions, there are at least two conditions (treatment and control), and the researcher manipulates the level of the independent variable (IV).

When these three criteria are met, then the observed changes in the dependent variable (DV) are most likely caused by the different levels of the independent variable.

The true experiment is the only research design that allows the inference of causality.

Of course, no study is perfect, so researchers must also take into account any threats to internal validity that may exist such as confounding variables or experimenter bias.

- Heterogeneous Sample Groups: True experiments often contain heterogeneous groups that represent a wide population.

- Clinical Trials: Clinical trials such as those required for approval of new medications are required to be true experiments that can assign causality.


Experimental Design vs Observational Design

Experimental design is often contrasted to observational design. Defined succinctly, an experimental design is a method in which the researcher manipulates one or more variables to determine their effects on another variable, while observational design involves the observation and analysis of a subject without influencing their behavior or conditions.

Observational design primarily involves data collection without direct involvement from the researcher. Here, the variables aren’t manipulated as they would be in an experimental design.

An example of an observational study might be research examining the correlation between exercise frequency and academic performance using data from students’ gym and classroom records.

The key difference between these two designs is the degree of control exerted in the experiment. In experimental studies, the investigator controls conditions and their manipulation, while observational studies only allow the observation of conditions as independently determined (Althubaiti, 2016).

Observational designs cannot infer causality as well as experimental designs, but they are highly effective at generating descriptive statistics.


Generally speaking, there are three broad categories of experiments. Each one serves a specific purpose and has associated limitations. The pre-experiment is an exploratory study to gather preliminary data on the effectiveness of a treatment and determine if a larger study is warranted.

The quasi-experiment is used when studying preexisting groups, such as people living in various cities or falling into various demographic categories. Although very informative, the results are limited by the presence of possible extraneous variables that cannot be controlled.

The true experiment is the most scientifically rigorous type of study. The researcher can manipulate the level of the independent variable and observe changes, if any, on the dependent variable. The key to the experiment is randomly assigning participants to conditions. Random assignment eliminates a lot of confounds and extraneous variables, and allows the researchers to use the term “causality.”




Experimental Design – Types, Methods, Guide


Experimental design is a structured approach used to conduct scientific experiments. It enables researchers to explore cause-and-effect relationships by controlling variables and testing hypotheses. This guide explores the types of experimental designs, common methods, and best practices for planning and conducting experiments.

Experimental Design

Experimental design refers to the process of planning a study to test a hypothesis, where variables are manipulated to observe their effects on outcomes. By carefully controlling conditions, researchers can determine whether specific factors cause changes in a dependent variable.

Key Characteristics of Experimental Design:

- Manipulation of Variables: The researcher intentionally changes one or more independent variables.

- Control of Extraneous Factors: Other variables are kept constant to avoid interference.

- Randomization: Subjects are often randomly assigned to groups to reduce bias.

- Replication: Repeating the experiment or having multiple subjects helps verify results.

Purpose of Experimental Design

The primary purpose of experimental design is to establish causal relationships by controlling for extraneous factors and reducing bias. Experimental designs help:

- Test Hypotheses: Determine if there is a significant effect of independent variables on dependent variables.

- Control Confounding Variables: Minimize the impact of variables that could distort results.

- Generate Reproducible Results: Provide a structured approach that allows other researchers to replicate findings.

Types of Experimental Designs

Experimental designs can vary based on the number of variables, the assignment of participants, and the purpose of the experiment. Here are some common types:

1. Pre-Experimental Designs

These designs are exploratory and lack random assignment; they are often used when strict control is not feasible. They provide initial insights but are less rigorous in establishing causality.

- Example: A training program is provided, and participants’ knowledge is tested afterward, without a pretest.

- Example: A group is tested on reading skills, receives instruction, and is tested again to measure improvement.

2. True Experimental Designs

True experiments involve random assignment of participants to control or experimental groups, providing high levels of control over variables.

- Example: A new drug’s efficacy is tested with patients randomly assigned to receive the drug or a placebo.

- Example: Two groups are observed after one group receives a treatment, and the other receives no intervention.

3. Quasi-Experimental Designs

Quasi-experiments lack random assignment but still aim to determine causality by comparing groups or time periods. They are often used when randomization isn’t possible, such as in natural or field experiments.

- Example: Schools receive different curriculums, and students’ test scores are compared before and after implementation.

- Example: Traffic accident rates are recorded for a city before and after a new speed limit is enforced.

4. Factorial Designs

Factorial designs test the effects of multiple independent variables simultaneously. This design is useful for studying the interactions between variables.

- Example: Studying how caffeine (variable 1) and sleep deprivation (variable 2) affect memory performance.

- Example: An experiment studying the impact of age, gender, and education level on technology usage.
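To make the structure of a factorial design concrete, the sketch below enumerates every combination of levels for the caffeine and sleep deprivation example. Treating each factor as having two levels, and the level names themselves, are assumptions made for illustration.

```python
from itertools import product

# Hypothetical 2 x 2 design: each factor (independent variable) has two levels.
factors = {
    "caffeine": ["placebo", "caffeine"],
    "sleep": ["rested", "sleep-deprived"],
}

# Every combination of one level per factor is one cell (condition) of the design.
cells = [dict(zip(factors, levels)) for levels in product(*factors.values())]

for number, cell in enumerate(cells, start=1):
    print(number, cell)
```

A 2 x 2 design has four cells; adding a third two-level factor would double that to eight, which is why factorial designs grow quickly in the number of conditions they need.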

5. Repeated Measures Design

In repeated measures designs, the same participants are exposed to different conditions or treatments. This design is valuable for studying changes within subjects over time.

- Example: Measuring reaction time in participants before, during, and after caffeine consumption.

- Example: Testing two medications, with each participant receiving both but in a different sequence.
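Because each participant contributes a score in every condition, repeated measures data are usually analyzed through within-participant differences. The sketch below computes difference scores and a paired t statistic using only the standard library. The reaction times are invented for illustration, and turning the statistic into a p-value would additionally require the t distribution, which is not shown here.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical reaction times in milliseconds; index i is the same participant in both lists.
without_caffeine = [310, 295, 330, 305, 320]
with_caffeine = [300, 290, 315, 300, 310]

# One difference score per participant removes stable individual differences.
differences = [a - b for a, b in zip(without_caffeine, with_caffeine)]

mean_difference = mean(differences)
standard_error = stdev(differences) / sqrt(len(differences))
t_statistic = mean_difference / standard_error  # paired t with n - 1 degrees of freedom

print(differences, mean_difference, round(t_statistic, 3))
```

Working from difference scores is what lets this design reduce participant variables: a participant who is slow overall is slow in both conditions, and that cancels out in the subtraction.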

Methods for Implementing Experimental Designs

1. Randomization

- Purpose: Ensures each participant has an equal chance of being assigned to any group, reducing selection bias.

- Method: Use random number generators or assignment software to allocate participants randomly.

2. Blinding

- Purpose: Prevents participants or researchers from knowing which group (experimental or control) participants belong to, reducing bias.

- Method: Implement single-blind (participants unaware) or double-blind (both participants and researchers unaware) procedures.

3. Control Groups

- Purpose: Provides a baseline for comparison, showing what would happen without the intervention.

- Method: Include a group that does not receive the treatment but otherwise undergoes the same conditions.

4. Counterbalancing

- Purpose: Controls for order effects in repeated measures designs by varying the order of treatments.

- Method: Assign different sequences to participants, ensuring that each condition appears equally across orders.

5. Replication

- Purpose: Ensures reliability by repeating the experiment or including multiple participants within groups.

- Method: Increase sample size or repeat studies with different samples or in different settings.
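When there are more than two conditions, one common way to make each condition appear equally often in each position is a cyclic Latin square. The sketch below is a minimal version of that idea; the condition labels and the function name `latin_square_orders` are assumptions.

```python
def latin_square_orders(conditions):
    """Build a cyclic Latin square: row r is the condition list rotated by r places."""
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]


# Hypothetical three-condition study; each row is the sequence given to one subgroup.
orders = latin_square_orders(["A", "B", "C"])
for sequence in orders:
    print(" -> ".join(sequence))
```

Each condition occupies the first, second, and third position exactly once across the three sequences. A cyclic square balances position only; it does not balance which condition immediately precedes which, so carryover effects may call for a different scheme.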

Steps to Conduct an Experimental Design

- Clearly state what you intend to discover or prove through the experiment. A strong hypothesis guides the experiment’s design and variable selection.

- Independent Variable (IV): The factor manipulated by the researcher (e.g., amount of sleep).

- Dependent Variable (DV): The outcome measured (e.g., reaction time).

- Control Variables: Factors kept constant to prevent interference with results (e.g., time of day for testing).

- Choose a design type that aligns with your research question, hypothesis, and available resources. For example, an RCT for a medical study or a factorial design for complex interactions.

- Randomly assign participants to experimental or control groups. Ensure control groups are similar to experimental groups in all respects except for the treatment received.

- Randomize the assignment and, if possible, apply blinding to minimize potential bias.

- Follow a consistent procedure for each group, collecting data systematically. Record observations and manage any unexpected events or variables that may arise.

- Use appropriate statistical methods to test for significant differences between groups, such as t-tests, ANOVA, or regression analysis.

- Determine whether the results support your hypothesis and analyze any trends, patterns, or unexpected findings. Discuss possible limitations and implications of your results.
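For the analysis step with two independent groups, a t statistic can be computed by hand. The sketch below uses Welch's form, which does not assume equal variances; that choice, the function name `welch_t`, and the scores are assumptions made for illustration. In practice a statistics package would also supply the degrees of freedom and p-value.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic: difference in means over its estimated standard error."""
    standard_error = sqrt(
        variance(group_a) / len(group_a) + variance(group_b) / len(group_b)
    )
    return (mean(group_a) - mean(group_b)) / standard_error


# Hypothetical outcome scores for two independent groups.
treatment = [12, 15, 14, 10, 13]
control = [9, 11, 8, 10, 12]

t_statistic = welch_t(treatment, control)
print(round(t_statistic, 3))
```

A larger absolute t value means the difference between group means is large relative to the variability within the groups, which is the comparison the hypothesis test rests on.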

Examples of Experimental Design in Research

- Medicine: Testing a new drug’s effectiveness through a randomized controlled trial, where one group receives the drug and another receives a placebo.

- Psychology: Studying the effect of sleep deprivation on memory using a within-subject design, where participants are tested with different sleep conditions.

- Education: Comparing teaching methods in a quasi-experimental design by measuring students’ performance before and after implementing a new curriculum.

- Marketing: Using a factorial design to examine the effects of advertisement type and frequency on consumer purchase behavior.

- Environmental Science: Testing the impact of a pollution reduction policy through a time series design, recording pollution levels before and after implementation.

Experimental design is fundamental to conducting rigorous and reliable research, offering a systematic approach to exploring causal relationships. With various types of designs and methods, researchers can choose the most appropriate setup to answer their research questions effectively. By applying best practices, controlling variables, and selecting suitable statistical methods, experimental design supports meaningful insights across scientific, medical, and social research fields.


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Experimental Designs

March 7, 2021 - paper 2 psychology in context | research methods.


In the study of Psychology there are three experimental designs that are typically used. An experimental design is the way in which the participants are used across the different conditions in a laboratory experiment. In a laboratory experiment there is always one control condition (a group of participants, matched as closely as possible to the experimental group, who do not receive the IV and who act as a baseline to compare against the experimental group), along with one or more experimental conditions. Researchers have to decide whether they want to use the same or different participants in the control and the experimental conditions.

There are three experimental designs that a researcher can use.

The Three Experimental Designs:

(1) Independent Measures: this is when a researcher uses participants once, in either the control condition or the experimental condition.

(2) Repeated Measures: this is when participants are used in each condition, both the control condition and the experimental condition(s).

(3) Matched Pairs: this is when participants take part in only one condition (either the control or the experimental condition), but participants are matched in terms of certain characteristics that may affect the outcome of the study. For example, in a study looking at memory performance, participants across the control and the experimental condition could be matched in terms of age, because age can have an effect on memory performance.

Exam Tip: In the exam, you need to be able to outline each experimental design in terms of how the participants are used. You also need to be able to look at a description of research and suggest which experimental design a researcher would be best using. The best way to justify the choice of experimental design is to know two strengths and two weaknesses of each design.

Evaluation of the 3 Experimental Designs:

Revising the evaluation of the three experimental designs can be made easy by learning the following items and by being able to apply these items as either a strength or weakness for each of the designs.

(1) The number of participants required for a study: the fewer participants a study requires, the better. It can be quite difficult for researchers to obtain participants, especially if funding is limited and the researcher is unable to pay participants for taking part. This is a strength of the repeated measures design: fewer participants are needed, as each participant takes part in both the control and the experimental condition. It is a weakness of the independent measures design: participants take part in only one condition, so twice as many participants are needed in a study with one control and one experimental condition.

(2) Participant variables: everybody is unique and different. Remember, when conducting research, the experimenter wants the only difference between the experimental and control conditions to be the manipulation of the independent variable. If we use different participants in the control and experimental conditions, not only is the IV different across these two conditions, but a potential extraneous variable (EV) is also introduced in the form of participant differences. Research is better when individual differences are controlled, so a strength of the repeated measures design is that the same participants are used in each condition and participant variables are therefore controlled. However, a design like the independent measures design introduces participant variables, which may lower a study’s internal validity.

(3) Demand characteristics: these occur when participants pick up on clues and cues in the experiment which help them to guess what the research is about and predict what the experimenter is looking for. When participants pick up on such clues and cues, they often change the way they would naturally behave. They may act in a way that gives the experimenter what they want so that they appear ‘normal,’ or they may deliberately act in a way that is different from what the experimenter is looking for to avoid being a conformist. Again, demand characteristics are an extraneous/confounding variable that experimenters do not want in their study (remember, the experimenter is looking to observe the effects of just the IV on the dependent variable, or DV). A weakness of the repeated measures design is that it can create demand characteristics, because taking part in every condition can help participants guess the aim of the study. A strength of the independent measures design is that participants take part in only one condition, so demand characteristics are minimised.

(4) Order effects: When participants repeat a task, results can be affected by order effects. Order effects include:

* Performing less well than you would normally due to boredom or fatigue

* Performance improving through practice in the first condition

A strength of the independent measures design is that, because participants take part in only one condition, they are less likely to become bored or practiced, and so the experiment is more likely to measure natural, real-life behaviour. On the other hand, a weakness of the repeated measures design is that, because participants take part in all the conditions, they are more likely to become bored or practiced as they progress through the conditions. This results in measuring unnatural behaviour, which lowers internal validity.

Evaluation:

The table below outlines the strengths and weaknesses associated with the three experimental designs.


12.1 Experimental design: What is it and when should it be used?

Learning Objectives

- Define experiment

- Identify the core features of true experimental designs

- Describe the difference between an experimental group and a control group

- Identify and describe the various types of true experimental designs

Experiments are an excellent data collection strategy for social workers wishing to observe the effects of a clinical intervention or social welfare program. Understanding what experiments are and how they are conducted is useful for all social scientists, whether they plan to use this methodology or simply understand findings of experimental studies. An experiment is a method of data collection designed to test hypotheses under controlled conditions. Students in my research methods classes often use the term experiment to describe all kinds of research projects, but in social scientific research, the term has a unique meaning and should not be used to describe all research methodologies.

Experiments have a long and important history in social science. Behaviorists such as John Watson, B. F. Skinner, Ivan Pavlov, and Albert Bandura used experimental designs to demonstrate the various types of conditioning. Using strictly controlled environments, behaviorists were able to isolate a single stimulus as the cause of measurable differences in behavior or physiological responses. The foundations of social learning theory and behavior modification are found in experimental research projects. Moreover, behaviorist experiments brought psychology and social science away from the abstract world of Freudian analysis and towards empirical inquiry, grounded in real-world observations and objectively-defined variables. Experiments are used at all levels of social work inquiry, including agency-based experiments that test therapeutic interventions and policy experiments that test new programs.

Several kinds of experimental designs exist. In general, designs that are true experiments contain three key features: independent and dependent variables, pretesting and posttesting, and experimental and control groups. In a true experiment, the effect of an intervention is tested by comparing two groups. One group is exposed to the intervention (the experimental group, also known as the treatment group) and the other is not exposed to the intervention (the control group).

In some cases, it may be immoral to withhold treatment from a control group within an experiment. If you recruited two groups of people with severe addiction and only provided treatment to one group, the other group would likely suffer. For these cases, researchers use a comparison group that receives “treatment as usual,” but experimenters must clearly define what this means. For example, standard substance abuse recovery treatment involves attending twelve-step programs like Alcoholics Anonymous or Narcotics Anonymous meetings. A substance abuse researcher conducting an experiment may use twelve-step programs in their comparison group and use their experimental intervention in the experimental group. The results would show whether the experimental intervention worked better than normal treatment, which is useful information. However, using a comparison group is a deviation from true experimental design and is more associated with quasi-experimental designs.

Importantly, participants in a true experiment need to be randomly assigned to either the control or experimental group. Random assignment uses a random process, like a random number generator, to assign participants to experimental and control groups. Random assignment is important in experimental research because it helps to ensure that the experimental group and control group are comparable, and that any differences between the groups before the intervention are due to random chance rather than to how participants were selected. We will address more of the logic behind random assignment in the next section.
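As a minimal sketch of random assignment with a random number generator, the Python snippet below shuffles a list of participants and splits it in half. The participant IDs, the function name `randomly_assign`, and the fixed seed are assumptions made for illustration only; a real study would follow its own allocation protocol.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two equal-sized groups."""
    rng = random.Random(seed)      # seeded only so the example is repeatable
    shuffled = list(participants)  # copy, so the original list is untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {
        "experimental": shuffled[:midpoint],
        "control": shuffled[midpoint:],
    }


# Hypothetical participant IDs P01..P10
participants = [f"P{i:02d}" for i in range(1, 11)]
groups = randomly_assign(participants, seed=42)
print(groups["experimental"])
print(groups["control"])
```

Because the split depends only on the shuffle, every participant has the same chance of ending up in either group.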

In an experiment, the independent variable is the intervention being tested. In social work, this could include a therapeutic technique, a prevention program, or access to some service or support. Social science research may have a stimulus rather than an intervention as the independent variable, but this is less common in social work research. For example, a researcher may provoke a response by using an electric shock or a reading about death.

The dependent variable is usually the intended effect of the researcher’s intervention. If the researcher is testing a new therapy for individuals with binge eating disorder, their dependent variable may be the number of binge eating episodes a participant reports. The researcher likely expects their intervention to decrease the number of binge eating episodes reported by participants. Thus, they must measure the number of episodes that occurred before the intervention (the pretest) and after the intervention (the posttest).

Let’s put these concepts in chronological order to see how an experiment runs from start to finish. Once you’ve collected your sample, you’ll need to randomly assign your participants to the experimental group and control group. Then, you will give both groups your pretest, which measures your dependent variable, to see what your participants are like before you start your intervention. Next, you will provide your intervention, or independent variable, to your experimental group. Keep in mind that many interventions take a few weeks or months to complete, particularly therapeutic treatments. Finally, you will administer your posttest to both groups to observe any changes in your dependent variable. Together, this is known as the classic experimental design and is the simplest type of true experimental design. All of the designs we review in this section are variations on this approach. Figure 12.1 visually represents these steps.
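The comparison at the heart of the classic experimental design can be sketched in Python as follows. The scores are invented purely for illustration (imagine weekly binge eating episodes) and the names `records` and `mean_change` are assumptions. A real analysis would also use an inferential statistical test, which this sketch leaves out.

```python
from statistics import mean

# Hypothetical pretest/posttest scores; NOT real data.
records = [
    {"group": "experimental", "pretest": 6, "posttest": 3},
    {"group": "experimental", "pretest": 5, "posttest": 2},
    {"group": "experimental", "pretest": 7, "posttest": 4},
    {"group": "control", "pretest": 6, "posttest": 6},
    {"group": "control", "pretest": 5, "posttest": 4},
    {"group": "control", "pretest": 7, "posttest": 7},
]


def mean_change(records, group):
    """Average posttest-minus-pretest change for one group."""
    changes = [r["posttest"] - r["pretest"] for r in records if r["group"] == group]
    return mean(changes)


experimental_change = mean_change(records, "experimental")
control_change = mean_change(records, "control")

# How much more the experimental group changed than the control group.
difference = experimental_change - control_change
print(experimental_change, control_change, difference)
```

Comparing the change in the experimental group against the change in the control group, rather than looking at the experimental group alone, is what lets the researcher separate the effect of the intervention from changes that would have happened anyway.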

An interesting example of experimental research can be found in Shannon K. McCoy and Brenda Major’s (2003) [1] study of people’s perceptions of prejudice. In one portion of this multifaceted study, all participants were given a pretest to assess their levels of depression. No significant differences in depression were found between the experimental and control groups during the pretest. Then, participants in the experimental group were asked to read an article suggesting that prejudice against their own racial group is severe and pervasive, while participants in the control group were asked to read an article suggesting that prejudice against a racial group other than their own is severe and pervasive. Clearly, their independent variables were not interventions or treatments for depression, but were stimuli designed to elicit changes in people’s depression levels. Upon measuring depression scores during the posttest period, the researchers discovered that those who had received the experimental stimulus (the article citing prejudice against their own racial group) reported greater depression than those in the control group. This is just one of many examples of social scientific experimental research.

In addition to classic experimental design, there are two other ways of designing experiments that are considered to fall within the purview of “true” experiments (Babbie, 2010; Campbell & Stanley, 1963). [2] The posttest-only control group design is almost the same as classic experimental design, except it does not use a pretest. Researchers who use posttest-only designs want to eliminate testing effects, in which a participant’s scores on a measure change because they have already been exposed to it. If you took multiple SAT or ACT practice exams before you took the final one whose scores were sent to colleges, you’ve taken advantage of testing effects to get a better score. Considering the previous example on racism and depression, participants who are given a pretest about depression before being exposed to the stimulus would likely assume that the intervention is designed to address depression. That knowledge can cause them to answer differently on the posttest than they otherwise would. Please do not assume that your participants are oblivious. More likely than not, your participants are actively trying to figure out what your study is about.

In theory, if the control and experimental groups have been randomly determined and are therefore comparable, then a pretest is not needed. However, most researchers prefer to use pretests so they may assess change over time within both the experimental and control groups. Researchers who want to account for testing effects and additionally gather pretest data can use a Solomon four-group design. In the Solomon four-group design, the researcher uses four groups. Two groups are treated as they would be in a classic experiment: pretest, experimental group intervention, and posttest. The other two groups do not receive the pretest, though one receives the intervention. All groups are given the posttest. Table 12.1 illustrates the features of each of the four groups in the Solomon four-group design. By having one set of experimental and control groups that complete the pretest (Groups 1 and 2) and another set that does not complete the pretest (Groups 3 and 4), researchers using the Solomon four-group design can account for testing effects in their analysis.
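The four groups described above can also be written out as a small data structure. The Python sketch below is illustrative only. The `Group` class is a hypothetical helper, and labelling Group 1 and Group 3 as the ones that receive the intervention is an assumption: the text says only that Groups 1 and 2 complete the pretest and Groups 3 and 4 do not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Group:
    name: str
    pretest: bool
    intervention: bool
    posttest: bool


# Assumed numbering: odd-numbered groups receive the intervention.
SOLOMON_FOUR_GROUP = [
    Group("Group 1", pretest=True, intervention=True, posttest=True),
    Group("Group 2", pretest=True, intervention=False, posttest=True),
    Group("Group 3", pretest=False, intervention=True, posttest=True),
    Group("Group 4", pretest=False, intervention=False, posttest=True),
]


def yes_no(flag):
    return "Yes" if flag else "No"


# Print the design as a simple text table.
print(f"{'Group':<10}{'Pretest':<10}{'Intervention':<14}{'Posttest':<10}")
for g in SOLOMON_FOUR_GROUP:
    print(f"{g.name:<10}{yes_no(g.pretest):<10}{yes_no(g.intervention):<14}{yes_no(g.posttest):<10}")
```

Comparing posttest scores between groups that share the same intervention status but differ in pretest status (for example, Group 1 against Group 3) is what allows testing effects to be examined.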

Solomon four-group designs are challenging to implement because they are time-consuming and resource-intensive. Researchers must recruit enough participants to create four groups and implement interventions in two of them. Overall, true experimental designs are sometimes difficult to implement in a real-world practice environment. Additionally, it may be impossible to withhold treatment from a control group or randomly assign participants in a study. In these cases, pre-experimental and quasi-experimental designs can be used. However, because they are less rigorous than true experimental designs, their conclusions are more open to critique.

Key Takeaways

- True experimental designs require random assignment.

- Control groups do not receive an intervention, and experimental groups receive an intervention.

- The basic components of a true experiment include a pretest, posttest, control group, and experimental group.

- Testing effects may cause researchers to use variations on the classic experimental design.

Classic experimental design - uses random assignment, an experimental group, a control group, pretesting, and posttesting

Comparison group - a group in quasi-experimental designs that receives “treatment as usual” instead of no treatment

Control group - the group in an experiment that does not receive the intervention

Experiment - a method of data collection designed to test hypotheses under controlled conditions

Experimental group - the group in an experiment that receives the intervention

Posttest - a measurement taken after the intervention

Posttest-only control group design - a type of experimental design that uses random assignment, an experimental group, a control group, and a posttest, but does not utilize a pretest

Pretest - a measurement taken prior to the intervention

Random assignment - using a random process to assign people into experimental and control groups

Solomon four-group design - uses random assignment, two experimental and two control groups, pretests for half of the groups, and posttests for all

Testing effects - when a participant’s scores on a measure change because they have already been exposed to it

True experiments - a group of experimental designs that contain independent and dependent variables, pretesting and posttesting, and experimental and control groups

Image attributions

exam scientific experiment by mohamed_hassan CC-0

- McCoy, S. K., & Major, B. (2003). Group identification moderates emotional response to perceived prejudice. Personality and Social Psychology Bulletin, 29, 1005–1017.

- Babbie, E. (2010). The practice of social research (12th ed.). Belmont, CA: Wadsworth; Campbell, D., & Stanley, J. (1963). Experimental and quasi-experimental designs for research. Chicago, IL: Rand McNally.

Scientific Inquiry in Social Work Copyright © 2018 by Matthew DeCarlo is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.
